Time to reveal, then slice/dice and analyze the hell out of the SC19 Student Cluster Competition results. It was a hard-fought battle with several teams vying for championship glory. Although everyone pushed hard, someone had to win, right? With that, let’s go to the results.

The Interview

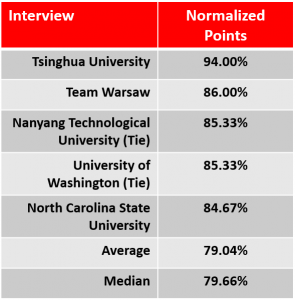

The interview portion of the competition is when judges visit each team and grill them about, well, everything.

They ask questions about how they selected the components of their cluster and why it is configured the way it’s configured. They ask about how they tuned the system and optimized each of their applications. There are also questions about how well the team worked together and queries aimed at seeing how well everyone pulled their weight on the team.

The interview accounts for 15% of each team’s overall score, and these are easy points to pick up if the team is on the ball.

Tsinghua University picked up the lion’s share of the interview points with a score of 94%. Team Warsaw pulled into second place on the interview with a score of 86% – not too shabby, eh? Nanyang and Washington tied for third with NC State getting honorable mention for their 84.67% result.

The Benchmarks

As we reported earlier, Nanyang Technological University (Team Nanyang: The Pride of Singapore) took home the top slot on both LINPACK and HPCG. Although these only counted for 15% of their final score, it was a great start and must have given them confidence for the application portion of the competition.

University of Washington took second place on LINPACK with Team Warsaw a very close third. On HPCG, Tsinghua nabbed second place with ShanghaiTech nailing down a third-place finish.

The Applications

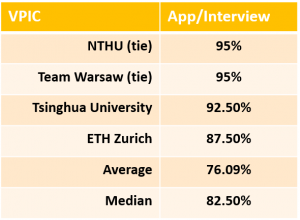

The first application up is VPIC. If you’re looking for a general-purpose simulation for modeling kinetic plasmas, then VPIC is a great choice. If you want to do it in two or three spatial dimensions, then you gotta get you some VPIC, it’s what all the kids are using.

Our student competitors in general did fine with VPIC, but the top finishers did better than fine. As you can see in the table, NTHU and Team Warsaw both finished with identical 95% scores on this app. Tsinghua posted a very respectable 92.50%. The rest of the field recorded an average score of just over 76% with the median being higher at 82.05%.

The next application is something called Sparkle. As far as I can tell, it’s a cryptographic routine based on the block cipher SPARX, but with a wider block size and a fixed key. So, I guess, this is sort of a crypto-based challenge for the students – maybe a type of blockchain-like hack?

Whatever it was, the students did pretty well on it. Nanyang just barely edged out NTHU for first place, with the Washington Huskies nipping at their heels. Just an eyelash behind Washington was ShanghaiTech, followed closely by Tsinghua. With a median score of 91.37%, the rest of the pack wasn’t far behind.

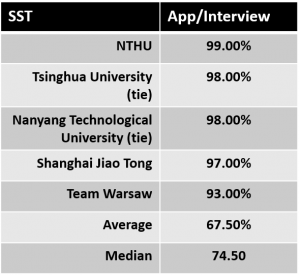

It’s a different story for SST. This application is a tool used to simulate computer system designs. Users can simulate instruction set architectures along with cache, memory, programming models and communications to see if it all holds together or collapses into a big steaming pile.

This was a tight one! NTHU managed to edge out archrival Tsinghua for top honors by only a single point with their 99% score. Tsinghua and Nanyang battled to a tie at 98%, with Shanghai Jiao Tong coming in third place with a lofty 97% result. Team Warsaw was in the thick of things with their 93%. Great job to all. The rest of the field seemed to have some problems with SST judging by the 67.50% average score. However, the median score of 74.50% shows us that teams either got this one or they didn’t – they either had high scores or suffered some real problems with SST.
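For anyone wondering why a median sitting above the average points to a "got it or didn’t" split, here is a minimal sketch. The scores below are made up for illustration and are NOT the actual SC19 results; the function name `median_minus_mean` is likewise just a label for this example.

```python
from statistics import mean, median

# Hypothetical scores (NOT the real SC19 SST results): a cluster of
# high finishers plus a handful of teams that struggled badly.
scores = [99, 98, 98, 97, 93, 80, 75, 74, 60, 45, 30, 20]


def median_minus_mean(values):
    """Return median minus mean; a positive gap means a few low
    scores are dragging the average below the typical team."""
    return median(values) - mean(values)


gap = median_minus_mean(scores)
print(f"mean={mean(scores):.2f} median={median(scores):.2f} gap={gap:.2f}")
```

A handful of very low scores pulls the average down much more than it moves the median, which is the same pattern the SST numbers show.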

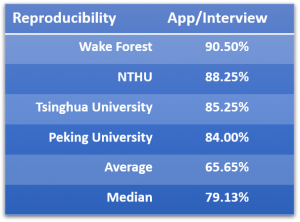

The Reproducibility Challenge is where student teams have to replicate the results of a paper presented at the previous SC conference. The paper this year? “Computing planetary interior normal modes with a highly parallel polynomial filtering Eigensolver.” Now if that doesn’t get your heart racing, I don’t know what will.

Wake Forest notched their first win on this challenge, beating NTHU by a little over two points with their 90.50% score. NTHU downed Tsinghua by three points, with Peking University scoring an honorable mention with their fourth-place score of 84%.

The field averaged only 65.65% on this task, meaning that there were some teams who had a lot of problems with it. Like SST, the median score skews higher, showing that teams scored well on Reproducibility or were out to sea on it – not much middle ground.

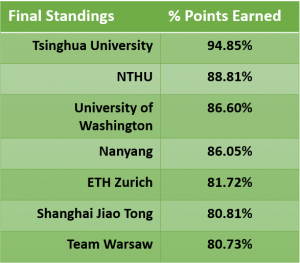

In the final round-up, it was Tsinghua pulling away from the rest of the pack with their aggregate score of 94.85%, which is a very high mark in a competition as, well, competitive as this one. This is Tsinghua’s ninth championship win in a major competition.

NTHU, although handicapped by a misbehaving GPU, managed to take second place with their score of 88.81%. Newbie team Washington shocked cluster competition observers with their bold 86.60% third-place finish. Just barely behind was Nanyang with a score of 86.05%. ETH Zurich and Shanghai Jiao Tong placed with scores of 81.72% and 80.73% respectively.

Once again, we see that winning a cluster competition doesn’t mean you have to be number one in all areas. The key to taking home the gold medal is to score consistently high and score well in all areas. With the exception of LINPACK, Tsinghua managed to post good to great showings in all of the applications and built their lead on their competition-high interview score. You don’t have to be perfect; you just have to be very good. Easier said than done, right?
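To put rough numbers on the "consistently very good beats occasionally perfect" idea, here is a small sketch of a weighted aggregate. The 15% interview and 15% benchmark weights come from this article; lumping the remaining 70% into a single "applications" bucket is an assumption, and both score sheets are hypothetical, not real team results.

```python
# Interview (15%) and benchmark (15%) weights are from the article.
# Treating the other 70% as one "applications" bucket is an ASSUMPTION.
WEIGHTS = {"interview": 0.15, "benchmarks": 0.15, "applications": 0.70}


def aggregate(scores):
    """Weighted overall score from per-category percentages."""
    return sum(WEIGHTS[category] * scores[category] for category in WEIGHTS)


# Hypothetical teams: one strong everywhere, one that tops the
# benchmarks but slips on the interview and the applications.
consistent = {"interview": 94, "benchmarks": 85, "applications": 95}
specialist = {"interview": 80, "benchmarks": 100, "applications": 82}

print(f"consistent: {aggregate(consistent):.2f}")
print(f"specialist: {aggregate(specialist):.2f}")
```

Under these assumed weights, a perfect benchmark run can’t make up for losing ground in the categories that carry most of the score.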

Our next story will be all about the numbers. We’ll be using our proprietary patented algorithms to see which teams got the most out of their hardware. Stay tuned….

Posted In: SC 2019 Denver, Latest News