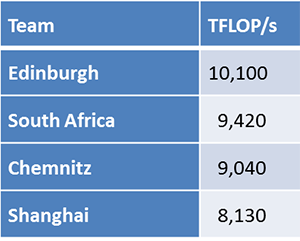

The LINPACK portion of the ISC’14 Student Cluster Competition was supposed to be routine, according to the cluster competition wise guys. Sure, some student team might set a new record, but no one was expecting the new mark to break through the 10 TFLOP/s barrier. And almost everyone expected the LINPACK crown to go to one of the Chinese powerhouse teams, or maybe the power-mad Chemnitz team, or even returning champion South Africa.

As the students finished their LINPACK runs, rumors of a new 10 TFLOP+ result started to swirl around the show floor. The scuttlebutt was that Team Edinburgh had driven what looked to be a puny cluster to an unimaginable 10.1 TFLOP/s.

In this case, the rumors were absolutely correct. Team Edinburgh pushed their four-node cluster, equipped with only 80 CPU cores and less memory (256 GB) than any other competitor, through the 10 TF LINPACK wall. In the process, they soundly topped the other competitors and secured a big slice of cluster competition glory for Edinburgh, for Scotland, and for the UK as a whole.

The secret to Edinburgh’s triumph has a lot to do with the design of their cluster. While their traditional node-CPU-memory configuration was definitely on the small side, they crammed their cluster to the gills with eight NVIDIA K40x Tesla GPUs.

While this config gave them a potentially huge numeric processing punch, all of the other top finishers on LINPACK were also sporting a double brace (meaning eight) NVIDIA K40 GPUs.

The challenge for all of these teams was figuring out the best way to take advantage of their processing potential without going over the 3,000 watt power cap.

Every team with a large configuration (8 or more nodes) had to throttle down some part of their system in order to have enough wattage to fuel their GPUs. This usually meant slowing down their CPUs. But not Edinburgh. In fact, they jammed the throttle to the firewall on both their CPUs and GPUs, with nary a worry about the cap.

They were able to do this because they had a small configuration and, more importantly, because they were using liquid cooling.

Boston Limited, their hardware sponsor, gave the team highly advanced liquid-cooled gear that included a radiator that could probably handle the heat generated by the Edinburgh cluster with enough headroom for two or three more.

Using liquid cooling allowed the team to get rid of a bunch of fans (several per node), which gave them the ability to run everything flat out.

I wasn’t expecting much from the LINPACK competition at ISC’14. At the ASC14 competition, held only a few months earlier, home team Sun Yat-sen University had set a new record of 9.272 TFLOP/s. Since there hadn’t been any new hardware introductions (particularly faster CPUs or GPUs) since ASC in April, I didn’t see how anyone was going to significantly top Sun Yat-sen’s score.

But it just goes to show that if there’s a will, there’s a way. Edinburgh wanted that LINPACK record, so they figured out a way to get it. Congratulations to them, their sponsors, and everyone in Scotland.

It was also good to see the plucky South African team nail down the second highest LINPACK, and home team Chemnitz grab third place. I wasn’t surprised to see Team Shanghai in the mix for LINPACK domination, but I knew they had set their sights on the Overall Championship, which dictates a more balanced approach on the system configuration.

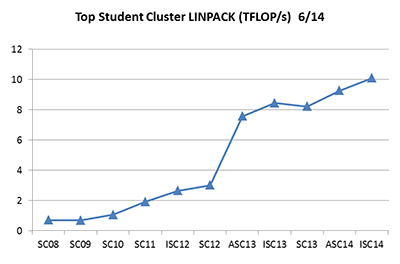

Here’s how the Edinburgh result fits into Student Cluster Competition LINPACK history.

As you can see, they’ve significantly raised the bar for student LINPACK-iness with an increase of 8.9% over the previous record.

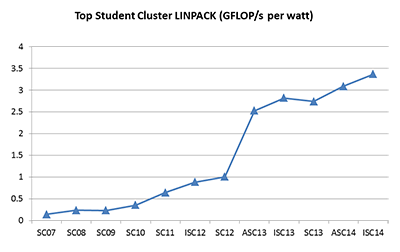

Looking at the same results from a GFLOP/s-per-watt perspective, the picture looks identical.

In fact, the flops/watt increase is exactly the same 8.9% improvement as seen in the LINPACK scores themselves.

I was a bit surprised by this. I figured that the liquid cooling would yield a better flop/watt ratio vs. the air-cooled systems.

However, my analysis could be flawed. I don’t have figures for exactly how much power each team was using for the LINPACK run they submitted. All I know is that they were using an amount less than the 3,000 watt power cap.
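To see why the two percentages have to match under that limitation, here is a rough sketch of the arithmetic. It assumes both record runs drew the full 3,000 watts, which is my stand-in and not a measured figure, and it uses the rounded 10.1 TFLOP/s and 9.272 TFLOP/s scores quoted above. The function and variable names are made up for illustration.

```python
# Scores quoted in the article. Actual per-run power draw is unknown,
# so the 3,000 W cap is ASSUMED for both teams.
EDINBURGH_TFLOPS = 10.1      # ISC'14 record (rounded figure from the article)
SUN_YAT_SEN_TFLOPS = 9.272   # previous record, set at ASC14
POWER_CAP_WATTS = 3000.0     # assumed draw for both runs


def percent_increase(new, old):
    """Relative gain of `new` over `old`, in percent."""
    return (new - old) / old * 100.0


def gflops_per_watt(tflops, watts):
    """Convert a TFLOP/s score to GFLOP/s per watt."""
    return tflops * 1000.0 / watts


score_gain = percent_increase(EDINBURGH_TFLOPS, SUN_YAT_SEN_TFLOPS)

edinburgh_eff = gflops_per_watt(EDINBURGH_TFLOPS, POWER_CAP_WATTS)
sun_yat_sen_eff = gflops_per_watt(SUN_YAT_SEN_TFLOPS, POWER_CAP_WATTS)
efficiency_gain = percent_increase(edinburgh_eff, sun_yat_sen_eff)

print(f"LINPACK gain:    {score_gain:.1f}%")
print(f"Edinburgh:       {edinburgh_eff:.2f} GFLOP/s per watt")
print(f"Sun Yat-sen:     {sun_yat_sen_eff:.2f} GFLOP/s per watt")
print(f"Efficiency gain: {efficiency_gain:.1f}%")
```

Because both scores are divided by the same assumed wattage, the divisor cancels and the efficiency gain is forced to equal the raw score gain. Any real efficiency edge for the liquid-cooled system would only show up with measured power figures for each run.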

I still think that the liquid-cooled Edinburgh system was more efficient on a flop/watt basis, but I can’t prove it… much like my belief that carrot cake is not a real dessert, or my belief that I can win a fair fight with any dog in the world.

Posted In: Latest News, ISC 2014 Leipzig

Tagged: supercomputing, Student Cluster Competition, HPC, LINPACK, The University of Edinburgh, ISC 2014, liquid cooling, Boston Limited, CoolIT Systems, Results
