One of the major differences between the recently completed Asia Student Supercomputer Challenge and the other major international competitions is the way the students get their clustering gear.

In the ISC and SC competitions, part of the task for students is to find a vendor partner and work with them to put together the best cluster for the event, and then make sure the gear arrives at the competition site. After that, it’s all about getting the cluster together and operating correctly, which can end up being quite an adventure in itself.

It’s a different deal at ASC. Inspur, the China-based multinational server vendor, is the sole hardware supplier for each team. This makes logistics easier for the teams, reduces travel/shipping costs, and also ensures that the equipment will be at the starting gate.

On the other hand, it also introduces potential problems arising from unfamiliar equipment, laying down your entire environment from scratch, and not knowing exactly how the equipment will react under stress.

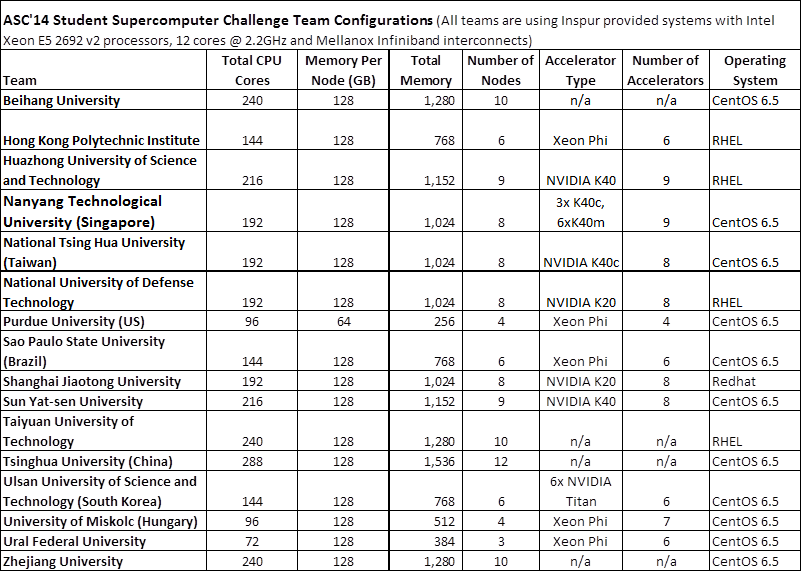

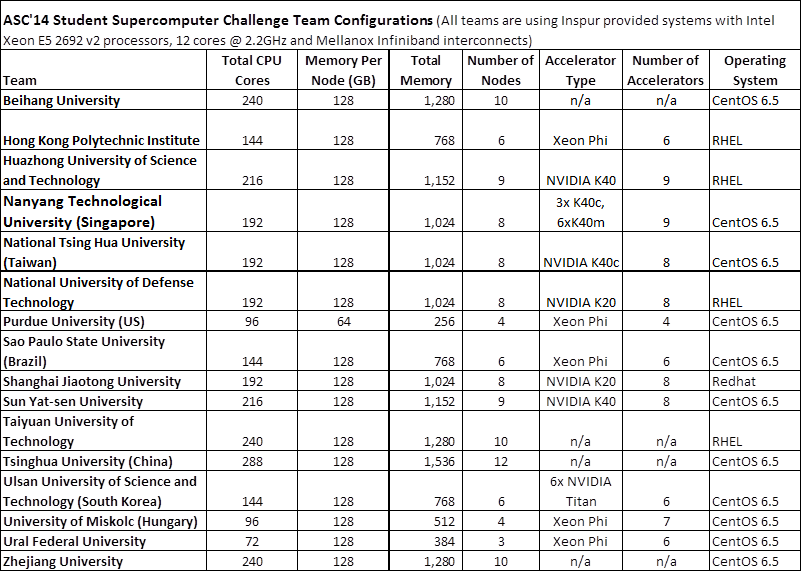

That said, here’s what the teams were sporting in Guangzhou at the 3rd Annual Asia Student Supercomputer Challenge…


So even though all the teams are using equipment from a single vendor, there’s still quite a disparity when you look at the configurations. Tsinghua University takes the crown for biggest traditional cluster, with a system boasting 12 nodes, a whopping 288 Xeon cores, and 1.5TB of memory. This system is a bit of a departure for Tsinghua; they typically turbocharge their competition boxes with GPUs, but eschewed them for ASC14.

Tsinghua, Taiyuan, and Zhejiang are the only schools that went old-school with their clusters; the other thirteen teams are using some sort of bolt-on accelerator.

Accelerators Galore

The bulk of the teams sported configurations of eight, nine, or ten nodes, and most of those pimped out their clusters with NVIDIA GPUs for that extra number-crunching boost. Huazhong University and Singapore’s Nanyang Tech are each running nine GPUs, with a host of teams configured with eight.

For the most part, they’re using a single GPU per node, but there are some exceptions. For example, Team Singapore is mixing three NVIDIA K40c GPUs with an additional six K40m GPUs, hoping that the passively cooled ‘m’ part will give them an advantage on power consumption.

Team South Korea is also taking a novel approach to GPU acceleration. Rather than use the usual K20 or K40 Tesla cards, they’ve opted to drive their 6-node cluster with 144 Xeon cores and 6 NVIDIA Titan GPUs. Yeah, Titans…NVIDIA’s top gamer video cards. The specs aren’t all that different, with the Titans running with a faster clock (836 vs. 745 MHz) and providing slightly more FLOPs.

However, the K40s have a lot more memory (12GB vs. 6.2GB) and suck up less power (235 vs. 250 watts). I’m also not sure if you can get HPC programs to recognize and use a GeForce GPU without a lot of messing around. (Let me know if this is true, GPU jockeys.)

Intel Phi is also well represented at ASC14. Team Hungary (University of Miskolc) went Phi-adelic, hanging seven Phi co-processors off of their quad-node cluster. Team Russia (Ural Federal University) has the highest Phi density with two co-processors for each of their three nodes.

Phi experience comes in handy on one of the competition applications. For the first time ever in a student cluster competition, the teams run code remotely on a true supercomputer. In fact, they run the 3D-EW app on the largest supercomputer in the world – the Tianhe-2, which is located just a couple of floors below them.

Each team uses 512 Tianhe-2 nodes, which include 1,536 Phi co-processors, in order to show how well they can optimize the code on a massive scale. The top two teams on this app then compete head-to-head in a second round, where they get to strut their stuff on 1,024 Tianhe-2 nodes.

More on the Way, Finally

In the next several days, I’ll be rolling out much more about this competition. This will include “Meet the team” videos, a closer look at Tianhe-2 and how it’s going to be used, informative interviews, and, of course, the results.

I’ve been holding back this content because I still don’t have the full, detailed results on how the teams did on the applications. I need this information in order to discuss why particular teams won or did especially well in the competition. It also tells me which areas presented the biggest challenges for the teams, which is always an interesting aspect of these competitions.

Deep-dive results are routinely provided to me at the conclusion of the SC and ISC competitions, but that doesn’t seem to be the case with ASC – even after repeated prodding and pinging. We’ll make the most of the subset of results we do have, and conclude from that how everything must have played out. So stay tuned…