If you’re going to win a Student Cluster Competition, you have to run real-world scientific applications at scale and make them run like a deer fleeing a forest fire. It’s all well and good to win the HPL and HPCG benchmarks, but those are only worth 18% of a team’s overall score. To put big points on the board, student teams must figure out how to run the apps on their self-designed clusters, then optimize and tune them to extract maximum performance.

Let’s look at the applications the students were tasked with and how they did.

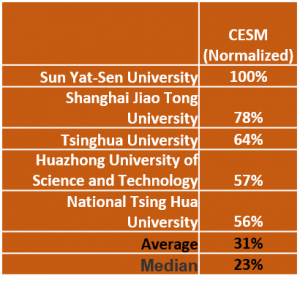

CESM, aka the Community Earth System Model, was the most difficult application in the ASC19 competition. In fact, it might well be the most difficult application in the history of the competitions, judging by the low average and median scores. Students dubbed it “Compiler Error System Model” because of the blizzard of compiler errors they had to fight through to get it stood up.

The big problem with CESM, according to the students, is that it was written back in the 1980s using FORTRAN and what they called “antique programming models.” Several teams couldn’t get it running at all on their clusters, and most teams had a hard time optimizing it. Even the winning team got only 12 of the 18 available points on the application.

To make things worse, there isn’t a version of CESM that takes advantage of GPUs, and the students who tried to adapt it found it very rough going. “This thing hotspots all over the place and it really can’t be parallelized enough to take advantage of many core GPUs,” said one student team.

Sun Yat-Sen University had the best score among the 20 competitors. We’re not quite sure how they did it. When we went over to interview them about their result, the team didn’t know they had won CESM, and they all jumped up and ran over to the results board.

Shanghai Jiao Tong University, or Team Shanghai, had the second-best score, finishing a bit behind Sun Yat-Sen but still scoring highly enough to nab the silver prize. Tsinghua took third place, just slightly ahead of HUST, who barely edged out NTHU for fourth.

As you can see from the table, the average result from all 20 teams was a woeful 31% – meaning this application was damned difficult. The median score is an even better indicator of how hard this app truly was. Ouch. Let’s turn the page.

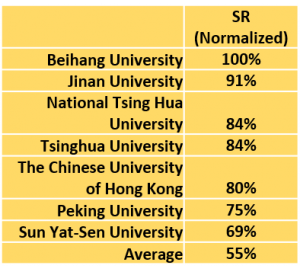

Faces: Super Resolution was the application that I thought was going to be the most difficult. In this application, the students have to build their own AI model in PyTorch and use it to improve the resolution of a large dataset filled with blurry face photos. I figured this would tie the students in knots, but I was wrong. This isn’t to say that there weren’t a few curveballs thrown at them. For one, the training dataset the students used was pretty much portrait pictures – heads all aligned vertically and centered horizontally. The dataset they used in the competition? Not so much. This caused some last-minute algorithm changes and a fair amount of teeth-gnashing among the student teams.

Beihang University, winner of the Highest LINPACK Award, took home first place on Faces SR with a perfect score. They were able to handle the different image formats given to them and achieve the highest final resolution. Jinan, a new team to student clustering, took home second place by a comfortable margin.

NTHU and Tsinghua were locked in a dogfight for the number three position, ending in a photo finish. Tsinghua edged NTHU by only 0.09 of a point to take third place. The Chinese University of Hong Kong took fifth place, which is a great showing for a brand-new team. Congrats!

Peking and Sun Yat-Sen round out the rest of the honor roll by scoring significantly better than the rest of the field.

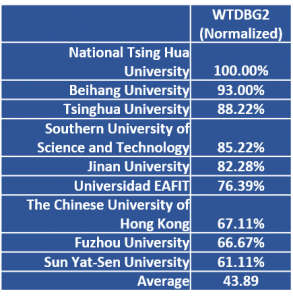

WTDBG2 is a long-read genome assembler that is about 30x faster than existing assemblers that require tens of thousands of CPU hours to assemble a human genome. If you need to assemble a human genome de novo and are pressed for time, I would heartily recommend WTDBG2.

The students were presented with several datasets and required to finish all the workloads with high enough accuracy and a low runtime.

Students had problems early on with WTDBG2. Sure, you can get it running on a single node, but this is a cluster competition, meaning you need to get it running on your entire cluster in order to complete the challenge.

NTHU pounded home their victory with a perfect score on this app, which means they nailed every workload with high accuracy and also ran the workloads in the least amount of time. Beihang University was a reasonably close second, with a score only 7% below NTHU’s 100%. Both teams were running smallish clusters (five and three nodes respectively) that were packed with NVIDIA V100 GPUs.

Tsinghua took home third place on this application with their score of 88.22%. They were running a larger seven node system with 12 GPUs. SUSTech made a bold play for fourth place, taking it down with a score of 85.22%, closely followed by Jinan at 82.28%. This is noteworthy because these are both new teams, and it’s rare for newbies to score so highly in their first competition. Good job!

We also have to call out EAFIT for special attention. They put together a great WTDBG2 run, and their score of 76.39% puts them well above the pack, which averaged 43.89%. The Chinese University of Hong Kong, Fuzhou University, and Sun Yat-Sen University also deserve some love for their higher-than-average scores.

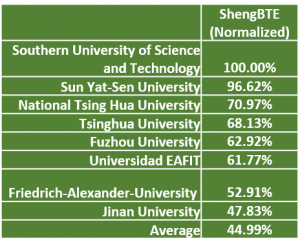

ShengBTE was the Mystery Application at ASC19. It’s a package that solves the Boltzmann transport equation. This is a useful way to figure out how physical things like heat energy or momentum change when a fluid is being transported – like in a pipe or a milk tanker truck. You can also use it to derive viscosity, thermal conductivity, and electrical conductivity. It’s pretty handy.
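
For the curious, the general textbook form of the Boltzmann transport equation looks roughly like the line below. This is background only and is not taken from the ShengBTE source. As far as we know, ShengBTE works with a linearized version of the equation for phonons (the lattice vibrations that carry heat through a crystal), so treat this as a sketch of the underlying idea rather than a description of what the students actually ran.

$$
\frac{\partial f}{\partial t} + \mathbf{v} \cdot \nabla_{\mathbf{r}} f + \frac{\mathbf{F}}{m} \cdot \nabla_{\mathbf{v}} f = \left( \frac{\partial f}{\partial t} \right)_{\text{coll}}
$$

Here $f(\mathbf{r}, \mathbf{v}, t)$ is the distribution of particles over position and velocity, $\mathbf{F}$ is any external force, $m$ is the particle mass, and the right-hand side accounts for collisions. Quantities like viscosity and thermal conductivity fall out of solving for $f$ and averaging over it.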

Looking at the leaderboard, we see a stunning upset. New team SUSTech topped all competitors on ShengBTE with a score of 100%, with only Sun Yat-Sen’s 96.62% presenting a serious challenge. It’s highly unusual to see a brand new team beat the upper echelon competitors on any application in their first year. Great job, SUSTech!

NTHU and Tsinghua were, as usual, locked in a tight race – this time for third place. NTHU edged out Tsinghua by a scant 3% or so to take home the prize. Fuzhou and EAFIT were similarly battling for fifth place, with Fuzhou winning by a nose. FAU, the German team, held off Jinan for sixth place, giving Jinan a solid seventh place finish.

Next up, we’ll take a look at some of our favorite moments from ASC19 and, of course, reveal the winners of the various prizes and show you how they won. Stay tuned….

Posted In: Latest News, ASC 2019 Dalian