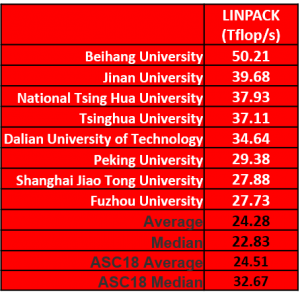

Beihang University topped the 20-team field by a comfortable margin to snag the Highest LINPACK award at ASC19. The team turned in a score of 50.21 Tflop/s, which was within spitting distance of the all-time LINPACK record of 56.51 Tflop/s recorded at SC18.

Team Beihang’s cluster configuration was the key to winning the LINPACK Award. The team put together a ‘small is beautiful’ three-node cluster that was jam-packed with 12 NVIDIA V100 GPUs, four per node. They also had 384 GB of memory per node and lashed it all together with a Mellanox FDR InfiniBand interconnect.

When you’re going for the LINPACK award, the fewer the nodes the better, as long as you have a lot of GPUs. Beihang played this strategy almost perfectly and took home the LINPACK trophy as a result.

First-time team Jinan nabbed second place with a score of 39.68 Tflop/s. Team Jinan was driving a smallish five-node cluster with 10 V100 GPUs. Jinan was punching above their weight, beating teams with higher GPU counts. Good job, Jinan.

Taiwan’s NTHU grabbed third in a photo finish with namesake Tsinghua University. NTHU was running five nodes with a whopping 16 GPUs, while Tsinghua spread 12 GPUs over their seven-node system.

Home team Dalian University of Science & Technology makes an early appearance on the leaderboard, taking fifth place with a score of 34.64 Tflop/s. They configured a four-node system with eight GPUs.

Honorable mentions go to teams Peking, Shanghai, and Fuzhou for breaking the 25 Tflop/s hurdle. These teams had eight, seven, and six nodes respectively. Fuzhou was sporting an awesome 16 NVIDIA V100 GPUs, while Peking brought 12 GPUs to the dance. Shanghai, however, had only six GPUs in total, yet still turned in a result that put them in the upper echelon of LINPACK scores. Nice work, Shanghai.

The average LINPACK score this year was virtually the same as the 2018 ASC average, but the median LINPACK score was significantly lower than at ASC18. This is largely due to lower GPU counts this year: the median number of GPUs per cluster at ASC19 was only four, compared to eight in 2018, which is a huge difference. In addition, students were configuring more CPUs in order to tackle a field of HPC applications that weren’t particularly GPU-centric.

Next up, we’ll take a look at the HPCG benchmark scores. Stay tuned…

Posted In: Latest News, ASC 2019 Dalian