University teams competing at the SC’18 Student Cluster Competition faced a grueling slate of applications and tasks. Students worked for months on end to design their clusters, tune them, and master the benchmark and real-world HPC codes set forth by competition organizers. Let’s take a look at the results:

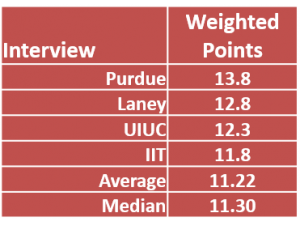

Students were judged on interviews conducted by HPC experts to see just how well they understood the applications and their cluster. Typically, the judges ask the competitors about the steps they took to configure and optimize applications to achieve maximum performance.

In my experience, the teams that do the best on the interview portion of the competition are teams that can show the judges they have a deep understanding of the apps and how they interact with the hardware – demonstrating that they’ve learned how to scale the applications and increase overall performance.

The all-female team from Purdue took home first place on the interview with newcomer Laney College from Oakland, CA, coming in second. Illinois teams UIUC (University of Illinois Urbana-Champaign) and Illinois Institute of Technology (IIT) were locked in a wrestling match for third place, with UIUC coming out on top.

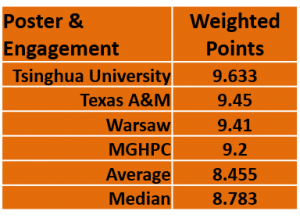

Students were also required to design and render out a poster that describes their team and approach to the competition. Tsinghua, Texas A&M and Warsaw were at the top of the charts, with Team Boston earning an honorable mention for scoring significantly higher than the rest of the field.

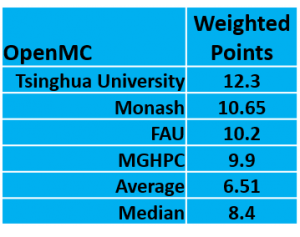

OpenMC is a Monte Carlo particle transport simulation code that focuses on what are called “neutron criticality” calculations.

Because OpenMC is a Monte Carlo code, it doesn’t solve the problem directly. Instead, it simulates a very large number of randomly sampled particle histories, and, once the problem has converged, it yields both the solution and the average behavior across the whole model. But because the model has to be randomly sampled over and over, Monte Carlo in OpenMC is grueling from a computational perspective.
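To get a feel for why repeated random sampling is so expensive, here is a minimal sketch in plain Python. It is not OpenMC code and doesn't use the OpenMC API. It is a toy problem chosen for illustration: neutrons hitting a purely absorbing slab, where the distance to the first collision is drawn from an exponential distribution. The function name `estimate_transmission` and its parameters are hypothetical.

```python
import math
import random


def estimate_transmission(thickness, sigma_total, histories, seed=0):
    """Estimate the fraction of neutrons that cross a purely absorbing slab.

    Toy model (an assumption for illustration, not OpenMC's physics):
    every collision absorbs the neutron, so a neutron gets through only
    if its first collision would occur beyond the slab.
    """
    rng = random.Random(seed)
    transmitted = 0
    for _ in range(histories):
        # Distance to the first collision is exponentially distributed
        # with rate sigma_total (the total macroscopic cross section).
        distance = rng.expovariate(sigma_total)
        if distance > thickness:
            transmitted += 1
    return transmitted / histories


if __name__ == "__main__":
    exact = math.exp(-1.0)  # analytic answer for thickness * sigma_total = 1
    for n in (100, 10_000, 200_000):
        estimate = estimate_transmission(1.0, 1.0, n)
        print(f"{n:>7} histories: {estimate:.4f} (exact {exact:.4f})")
```

The statistical error of an estimate like this shrinks only with the square root of the number of histories, so each extra digit of accuracy costs roughly 100 times more samples. That is the computational grind in miniature.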


Tsinghua University topped the field on OpenMC with 12.3 weighted points. Aussie team Monash came in second with 10.65. FAU and Team Boston (MGHPC) were virtually tied for third, with FAU pulling ahead by an eyelash for the W.

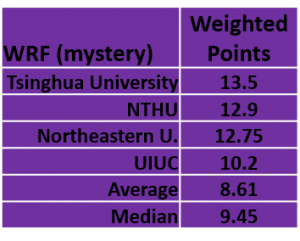

WRF: You want to predict the weather or forecast the future of earth’s climate? Then you need WRF, the premier climate modeling software. Feed it idealized or real data and it’ll crunch out either operational forecasts or long-range climate simulations. It’s been a staple of Student Cluster Competitions, used at many events, and was the Mystery Application for the SC18 competition.


Tsinghua University has seen WRF several times in the past, as has Taiwan’s NTHU, so it’s not surprising that they took first and second place. Northeastern was a close third with UIUC taking an honorable mention slot for their score of 10.2.

Horovod is a TensorFlow tool developed by Uber to increase the parallelism and efficiency of their TensorFlow calculations. Before Horovod, the company found that they were barely able to utilize half of the 128 GPUs in their test system. Horovod uses a communications architecture in which each node communicates with two of its peers in a ring, so that it makes better use of the available network bandwidth. The performance impact of Horovod is profound. TensorFlow benchmarks that were modified to use Horovod scaled to 88% of GPU capacity and delivered nearly twice the performance of standard TensorFlow.
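The ring idea is easier to see in code. What follows is a minimal single-process simulation of a ring allreduce, the general technique in which each worker only ever sends to one neighbor and receives from the other. It is a sketch under stated assumptions, not Horovod's actual implementation: the function `ring_allreduce` and its list-of-lists input are hypothetical stand-ins for per-GPU gradient buffers, and no real network traffic is involved.

```python
def ring_allreduce(worker_data):
    """Simulate a ring allreduce (sum) across workers.

    worker_data is a list of equal-length lists of numbers, one list per
    worker. Returns one list per worker, each holding the element-wise sum.
    This is an illustrative simulation, not Horovod's implementation.
    """
    n = len(worker_data)
    length = len(worker_data[0])
    # Chunk c covers indices bounds[c] up to (not including) bounds[c + 1].
    bounds = [c * length // n for c in range(n + 1)]
    data = [list(values) for values in worker_data]

    def chunk(c):
        c %= n
        return range(bounds[c], bounds[c + 1])

    def exchange(first_chunk_offset, combine):
        for step in range(n - 1):
            # Snapshot every outgoing message first, so that all sends in
            # a step happen "at the same time", as they would on a ring.
            messages = []
            for i in range(n):
                c = (i + first_chunk_offset - step) % n
                messages.append((c, [data[i][k] for k in chunk(c)]))
            for i, (c, payload) in enumerate(messages):
                right = (i + 1) % n  # each worker sends only to its right neighbor
                for k, value in zip(chunk(c), payload):
                    data[right][k] = combine(data[right][k], value)

    # Phase 1 (scatter-reduce): partial sums travel around the ring until
    # each worker owns the complete sum for exactly one chunk.
    exchange(0, lambda mine, incoming: mine + incoming)
    # Phase 2 (allgather): the completed chunks travel around the ring
    # until every worker has all of them.
    exchange(1, lambda mine, incoming: incoming)
    return data


if __name__ == "__main__":
    gradients = [[1, 2, 3, 4], [10, 20, 30, 40], [100, 200, 300, 400]]
    for rank, summed in enumerate(ring_allreduce(gradients)):
        print(rank, summed)
```

In each step a worker sends just one chunk, roughly 1/N of the buffer, so the amount of data any single link carries stays about the same as workers are added. That is the sense in which a ring layout makes good use of the available bandwidth.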


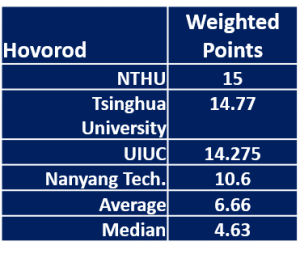

From the results, it looks like NTHU bonded with Horovod, nabbing 15 points on this task. Tsinghua and UIUC weren’t far behind with scores of 14.77 and 14.275 respectively. Nanyang made a very rare stumble, taking home only 10.6 weighted points on this app.

Reproducibility: This task has been around for only a few years. It requires the student teams to set up a computational problem from a recently submitted research paper and then prove whether the original calculations are replicable. This year, the students ran a simulation of the 2004 Sumatra-Andaman earthquake. The challenge had two components: the first was an interview with each of the teams to evaluate their understanding of the task, and the second was a report outlining how the teams got their results and comparing those results to the original paper.

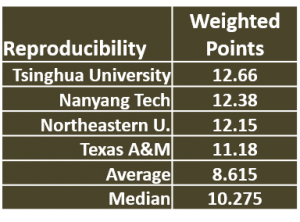

Once again, we see three teams closely vying for the top slot on this app. Tsinghua managed to pull out number one by a tiny margin over Nanyang, and, in turn, Nanyang barely managed to protect number two from Northeastern University. Texas A&M made another appearance on the leaderboard with their fourth-place finish.

Next up we reveal the Overall Champion and Gold Medal winner of the SC’18 cluster comp. Stay tuned…

Posted In: Latest News, SC 2018 Dallas