The ISC Student Cluster Competition is in the record books and now it’s time to dive into the numbers and see what happened. This time, we’re going to present the results day-by-day and show you how the lead changed hands over time.


Day 1: HPCC, HPCG

The first day is devoted to industry-standard benchmarks. HPCC (also known as the HPC Challenge) is a suite of different benchmarks designed to exercise every part of a cluster. It includes the following components.

- HPL (LINPACK) measures floating-point execution rate by solving a dense linear system

- DGEMM measures double-precision floating-point matrix-matrix multiplication performance

- STREAM pushes and tests sustainable memory bandwidth

- PTRANS (parallel matrix transpose) tests simultaneous processor-to-processor communication

- RandomAccess measures the rate of random updates of integer memory

- FFT measures the double-precision floating-point execution rate of a complex one-dimensional Discrete Fourier Transform

- Communication bandwidth and latency tests measure both quantities across a number of simultaneous communication patterns

HPCG is a new-ish benchmark from Jack Dongarra, the author of the seminal LINPACK test. HPCG more closely conforms to the types of HPC processing we see today.

HPCG is a new-ish benchmark from Jack Dongarra, the author of the seminal LINPACK test. HPCG more closely conforms to the types of HPC processing we see today.

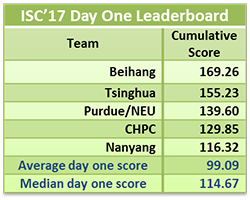

As we can see in the table, the leaders in the competition were fairly close together at the end of the first day. Beihang rode a near-perfect HPCC run to lead the competition. While Tsinghua did score 100 per cent on HPCG, they stumbled a bit on HPCC, which landed them in second place on Day 1. Purdue/NEU absolutely nailed HPCG, garnering the highest score, but also fell back a bit on HPCC, landing them in third overall for the day.

CHPC and Nanyang hung in with the top tier, still in striking distance on Day 1.

Day 2: Fun with LAMMPS, FEniCS, and MiniDFT

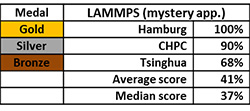

Day 2 was the first day of the application runs, with students running LAMMPS, the mystery application. LAMMPS stands for Large-scale Atomic/Molecular Massively Parallel Simulator, a molecular dynamics code that simulates particles in liquid, solid, or gaseous states.

Our pals at the University of Hamburg did an exemplary job on LAMMPS, hitting on all cylinders to top all of the other teams. CHPC grabbed second on this app, only 10 per cent behind the leader. The scores tailed off quickly, with Tsinghua posting a 68 per cent mark. The average score was pretty low for such a well-known application.

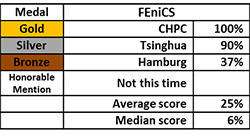

Students also ran FEniCS, which solves partial differential equations. It gives users the ability to quickly translate scientific models into efficient finite element code. Judging by the scores, this was the most difficult application in the competition, and it really threw students for a loop.

As you can see on the table, CHPC owned FEniCS, closely followed by Tsinghua. While Hamburg’s score of 37 per cent may not seem like something to write home about, you have to realize that they beat eight other teams with that score, so there.

As you can see on the table, CHPC owned FEniCS, closely followed by Tsinghua. While Hamburg’s score of 37 per cent may not seem like something to write home about, you have to realize that they beat eight other teams with that score, so there.

The average and median scores were both very low, with several teams unable to complete the application within the allotted time. The organizers did a great job by selecting FEniCS; it really gave the students a mountain to climb. Of course, that’s one of the points of these competitions: to force the students to learn new HPC applications and how to optimize them.

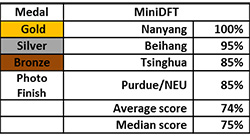

The other application for Day 2 was MiniDFT, a plane-wave density functional theory mini-application for modelling materials. The students were first tasked with optimizing the code before the competition, and their optimized versions were then posted publicly.

Then the students were given the opportunity to look at their competitors’ codes, and tasked with optimizing the code again for a run during the ISC’17 event. Stealing, borrowing, or copying code from other competitors was fair game – the goal was to come up with the best optimization overall.

Then the students were given the opportunity to look at their competitors’ codes, and tasked with optimizing the code again for a run during the ISC’17 event. Stealing, borrowing, or copying code from other competitors was fair game – the goal was to come up with the best optimization overall.

If you watched our video with Nanyang, you’ll remember that I specifically asked them about MiniDFT and whether they had “borrowed” any code from the other teams.

The guy in charge of the application said that he looked at the other team codes, but was comfortable that his version was the best. It turns out he was right, but by only a 5 per cent margin. Everyone should have been stealing his code for this application, since it proved to be the winning combination.

Beihang wasn’t far behind with their 95 per cent score. Tsinghua and Purdue/NEU were essentially knotted up at 85 per cent (only fractions of a point separating them) on MiniDFT.

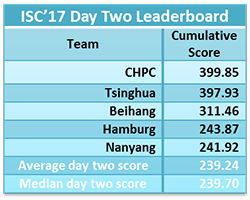

The Day 2 results from the application runs changed the leaderboard quite a bit. Hamburg had the highest score on LAMMPS, which elevated them into fourth place on the big board.

The Day 2 results from the application runs changed the leaderboard quite a bit. Hamburg had the highest score on LAMMPS, which elevated them into fourth place on the big board.

CHPC scored uniformly high on LAMMPS and MiniDFT, and topped all other competitors on FEniCS, which gave them the top spot for the competition at the end of Day 2.

Tsinghua was in second place by the narrowest of margins, with Beihang a little farther back in third – but still within striking distance.

Day 3: TensorFlow & Interview

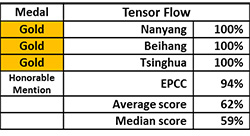

The last application of the contest was TensorFlow, with a dataset designed by one of the competition organizers. He was quite surprised to see three teams score a perfect 100 per cent on his dataset – he thought it was a lot harder than it turned out to be.

The last application of the contest was TensorFlow, with a dataset designed by one of the competition organizers. He was quite surprised to see three teams score a perfect 100 per cent on his dataset – he thought it was a lot harder than it turned out to be.

Nanyang, Beihang, and Tsinghua pwned him outright, with honorable mention going to EPCC for their 94 per cent finish. The rest of the field did OK too, with an average score of 62 per cent. Message to TensorFlow judge: try harder next time.

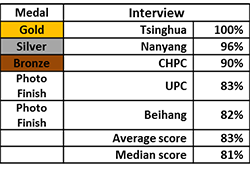

The final activity is the interview, which counts for 10 per cent of the total score for each team. This isn’t a quick and easy “Hey, did you learn anything?” exercise. Judges ask teams why they decided on their particular cluster configuration. They ask about specific steps teams took to optimize each application. Several questions center on power usage and what the teams did to maximize their performance per Watt.

Tsinghua turned in an amazing interview performance. They had built a presentation that outlined their cluster configuration and discussed every application. Their presentation then showed the steps the team took to optimize each application, the speed-up achieved through each step, and then a summary of their results.

Nanyang and CHPC did a bang-up job on their interviews as well, scoring 96 and 90 per cent respectively. Beihang topped the rest of the field with their score of 82 per cent.

The Reckoning: Final Results

The final standings are in the table to the left. Tsinghua took the top spot by earning 88 per cent of the total possible points. The team did particularly well on the interview, TensorFlow, and FEniCS.

This is their second major win of the Student Cluster Competition season – they grabbed the Overall Championship at ASC17 earlier this year. Right now, this team is on track to win the Triple Crown of student clustering – they just need a win at SC17 in November to complete the hat-trick.

CHPC finished just behind Tsinghua with 79 per cent of the total points. This was a very solid CHPC team and many were betting on them to successfully defend their ISC16 championship. The team did particularly well on FEniCS, dominating the rest of the field, and did well on the interview portion of the competition.

Beihang takes home the third place trophy after a hard-fought tourney. They were tops on the Day 1 leaderboard, but found that lead slowly slipping away as the competition continued. They turned in solid performances, usually in the top tier, but it wasn’t quite enough to get them over the top this time. But the team is improving and I expect them to make a bid for the top slot next year.

Nanyang is another team that has shown a lot of improvement over the past few years. They just missed a third-place finish at ISC17 and they’ve qualified for the SC17 competition, so they have another chance at glory.

UPC made a name for themselves in the competition in two ways: first by bringing yet another massive ARM-based system to the competition, and second by bringing home the Fan Favorite award to Barcelona. Nice work for the Spaniards.

Looking ahead…

The ISC’17 Student Cluster Competition was record-setting, with new highs set in LINPACK and HPCG. We’re seeing more AI-type applications, with PaddlePaddle at the ASC17 competition and TensorFlow in this competition.

The next, and final, event in the Student Cluster Competition season will be in Denver at SC17 this November. SC17 will have a 16 team field which will include Tsinghua, the ISC’17 Champion, plus other ISC’17 participants, including Nanyang, Northeastern University, and LINPACK winning FAU. We’ll be covering it like a first-time parent covering up their baby, so stay tuned.

Posted In: Latest News, ISC 2017 Frankfurt

Tagged: supercomputing, Student Cluster Competition, Tsinghua University, Centre for High Performance Computing, Beihang University, Nanyang Technological University, Universitat Politecnica de Catalunya, ISC 2017, Results