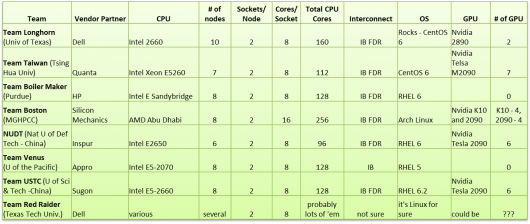

At last, I can reveal the final configurations of the systems the SC12 Student Cluster Competition (SCC) warriors brought to Salt Lake City last week. OK, I could have revealed them last week, but I was too busy at the show to write the story. That said, the chart above shows the gear they were running.

First, kudos to all of the sponsors who generously provided so much equipment at no cost to the cluster crown competitors. (There isn’t a real crown.) How about next year, all of you chip in and we get an actual Cluster Crown that can go to the winning team? Maybe make it out of old motherboards and chips and stuff?

So, what’s noteworthy on the builds above? First, it’s too bad that I don’t have the total memory figures in the table above. Memory per node and per core is important and might explain some of the performance differences that we’ll see in the final results. But that aside, there’s plenty of other interesting info in the table.

We see that at least five of the eight teams used GPUs this time around. Last year, about half the teams were using accelerators, and they dominated the field on both LINPACK and application performance. Team Longhorn used GPUs for the first time this year and was excited about the performance boost they’ve seen vs. the traditional system they ran last year. Team Venus was thinking about using GPUs, but decided against it due to their inexperience with clusters and HPC in general. Purdue was also pondering the whole accelerator issue, and I think this will be their last non-hybrid SCC cluster.

Team Boston, sticking with their tradition of bringing anything that will run code, brought two flavors of GPUs. They also looked at running ARM processors and even talked about mixing some Phi (Intel Phi, that is) into their cluster cake. While their configuration sports 256 cores, I don’t think they were able to use them all during the competition due to power considerations. Teams are capped at 26 total amps (at 110 volts), and I’m pretty sure that if Boston fired up all that hardware at once, they’d be well past that limit.
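For a rough sense of what that cap means in practice, here's a back-of-the-envelope sketch in Python. It assumes the 110 figure is the supply voltage and treats power as simply amps times volts. The function names and the per-node wattages are made up for illustration; they aren't measurements from any team's cluster.

```python
# Back-of-the-envelope power budget for a competition cluster.
# Assumption: the 26 amp cap applies at 110 volts, so power = amps * volts.
AMP_LIMIT = 26.0
ASSUMED_VOLTS = 110.0


def power_budget_watts(amps=AMP_LIMIT, volts=ASSUMED_VOLTS):
    """Return the total power available under the amp cap, in watts."""
    return amps * volts


def fits_budget(node_watts, amps=AMP_LIMIT, volts=ASSUMED_VOLTS):
    """Return True if the summed draw of all nodes stays within the cap."""
    return sum(node_watts) <= power_budget_watts(amps, volts)


if __name__ == "__main__":
    # Hypothetical per-node draw in watts (illustrative only).
    hypothetical_nodes = [400.0] * 8
    print("Budget (W):", power_budget_watts())
    print("Total draw (W):", sum(hypothetical_nodes))
    print("Within cap:", fits_budget(hypothetical_nodes))
```

Under those assumptions the whole cluster gets a bit under 3 kilowatts, which is why a team with a lot of hardware on the table may have to leave some of it powered down.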

The Chinese representatives, Teams NUDT and USTC, both brought six NVIDIA 2090s. NUDT had the lowest node count in the competition and yet the highest LINPACK score at just over 3 teraflop/s. Their countrymen from USTC finished second with 2.73 teraflop/s.

And then there’s Team Red Raider from Texas Tech University. Every year at the SCC, there’s a team that seems almost cursed. Deliveries fall through, things break, software doesn’t perform like it did in the lab, etc. This year, that team is Team Red Raider.

They had big plans for SCC12. First, they designed their own immersion liquid cooling mechanism, using parts they scrounged or built themselves. They used an automobile transmission radiator for cooling and a boat bilge pump to move the liquid through flexible plastic piping to their homegrown server deep-fryer enclosure. They did a lot of custom engineering and shade-tree fixes in order to make this design extremely efficient.

The only problem was that their system didn’t show up in Salt Lake City. It was somehow stuck in Mexico, of all places, and couldn’t be shipped in time to make the contest. At one point, the Tech test system was going to be shipped to the show, but that fell through at the last minute. So the Red Raiders were left with laptops, a big canister full of mineral oil, and a huge sense of disappointment. But that’s not the end of the story…

When the rest of the field heard about the Red Raider problem, the teams from UT and Purdue stepped in and contributed some gear to get Texas Tech back in the game. Team Boston also contributed a couple of nodes to the effort. Meanwhile, both Dell and Mellanox came up with some servers to help fill out the rest of the cluster. The Texas Tech team busily incorporated all of this disparate gear into some sort of clustery order and managed to complete all of the scientific applications. Their attitude stayed upbeat, and they made the best of a bad situation.

Their configuration changed too quickly to keep up with, and I don’t think the final configuration is documented. But they ran what they had and put in a respectable showing. I hope they get the chance to compete next year.

Even though their cooling solution was homegrown, it was clear that they put an enormous amount of thought into it, and it would have consumed considerably less power than previous immersion solutions we’ve seen at SCC. According to the team, they were able to achieve a 4.0 teraflop/s LINPACK score in their lab under competition conditions. This would have landed them the LINPACK award for sure; who knows what they could have done on the scientific apps? But everyone’s system does better in the lab than in the real competition – so we need the Red Raiders to bring their stuff to SC13 in Denver and prove it.

More Student Cluster Competition coverage to follow. In upcoming blogs, we’ll talk to the teams on the final day of the competition, learn more about the LittleFe Traveling Salesman challenge, and attend the gala awards luncheon too.
