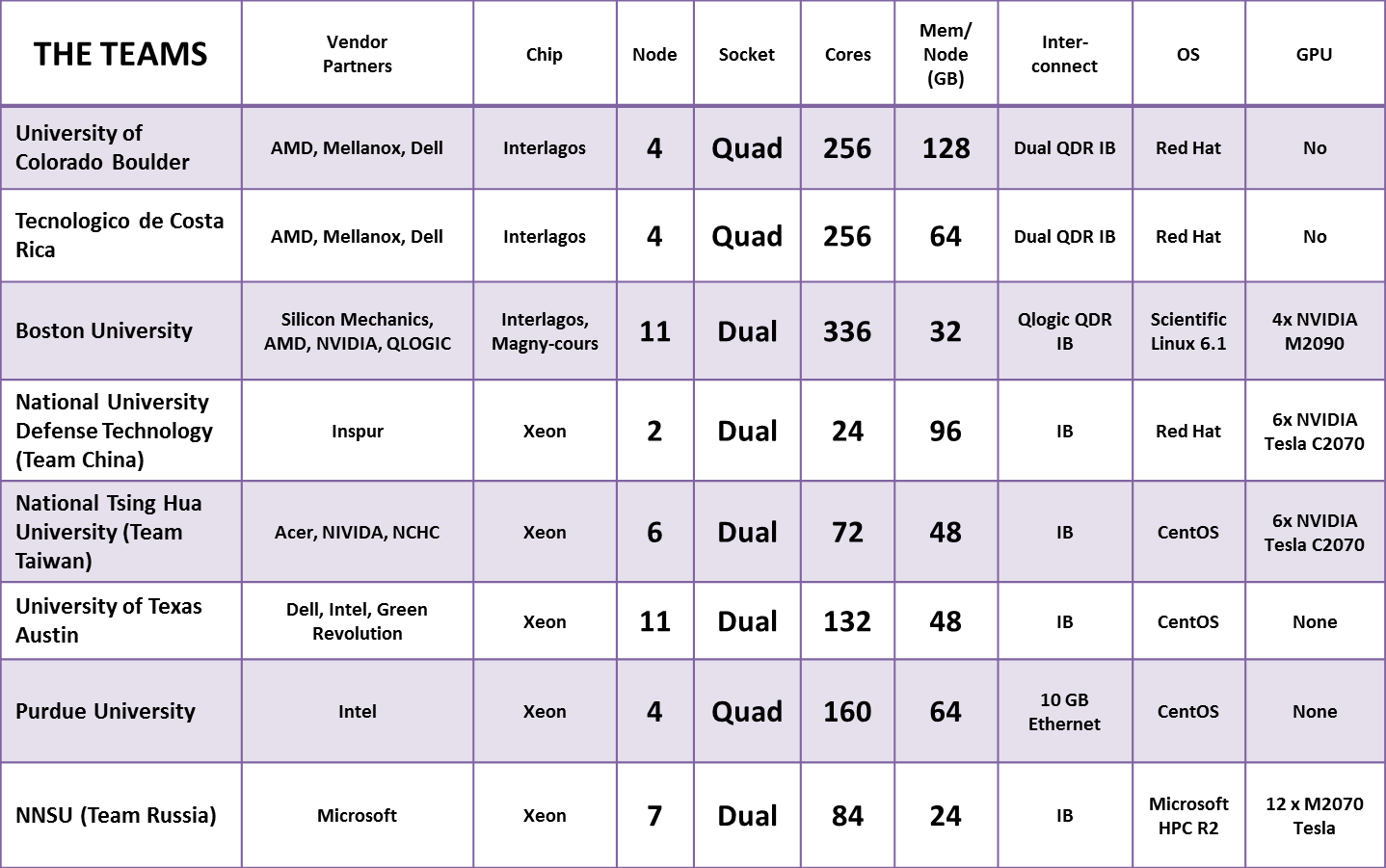

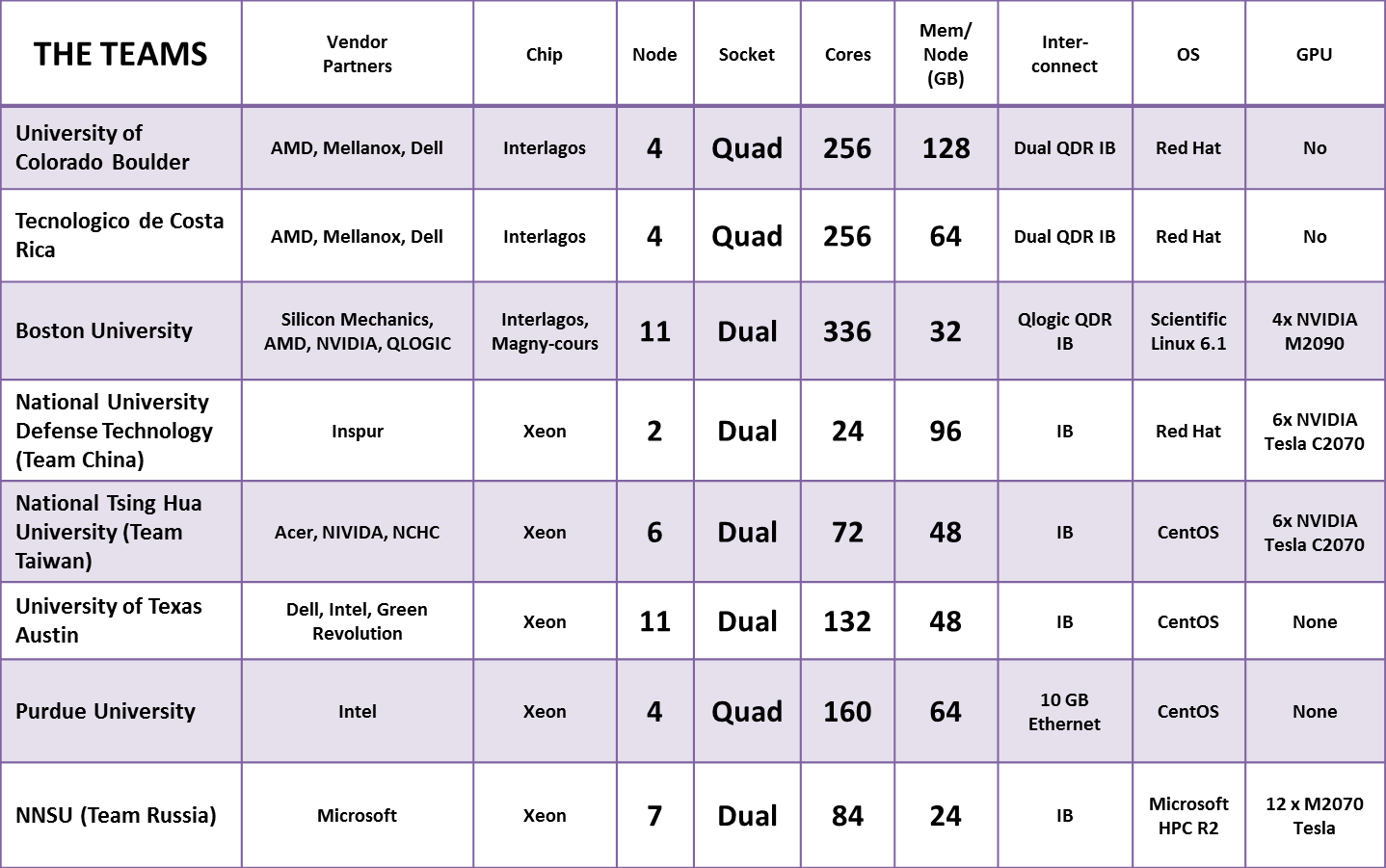

The hardware configurations for the 2011 SC Student Cluster Competition have been released, and there are quite a few surprises. First, the most surprising surprise: the University of Texas Longhorn team has brought the first liquid-cooled system to the big dance.

They’ve partnered with Green Revolution to put together an 11-node, 132-core, Xeon-fueled cluster that is completely immersed in a vat of mineral oil. The oil flows around the large pizza-box server boards, then through a radiator where a large fan disperses the heat into the air.

Team Texas believes that the move to liquid gives them anywhere from 5% to 15% more overall compute power while keeping them under the hard 26-amp ceiling. I’ve shot plenty of video of the system and the team, including swapping out servers, so check back for updates.

NUDT (Team China) has brought a much more compact design that is more reliant on GPUs than any other team’s, much like the chart-topping Tianhe supercomputer that their institution built last year. The Team China cluster uses a grand total of four Xeon processors (24 cores total) to act as traffic cops for six NVIDIA Tesla C2070 GPU accelerators.

What’s interesting is that most of the teams have configured much more hardware and then throttled it down to meet the 26-amp hard limit, while China seems to have configured ‘just enough’ hardware to approach the limit.

Whether they’ve been able to optimize the scientific applications to run well on GPUs is also an open question. Most of the other teams sporting accelerators have found this to be a bit of a barrier on some of the apps and have, as a result, made sure they’re packing plenty of traditional x86 chippery to handle the workloads that aren’t GPU-friendly.

Colorado and Purdue, the most veteran teams in the competition, have taken an appropriately old-school approach to their clusters. Their daddies didn’t use any fancy accelerators, their granddaddies didn’t use ‘em – so they’re not using them either. As the teams explained to me, they didn’t see much advantage to accelerators, since not all of the workloads could take advantage of the extra number-crunching power.

But they are using plenty of traditional iron. Colorado is sporting sixteen AMD 16-core Interlagos processors, giving them 256 cores on four quad-socket nodes. Purdue is using Intel’s 10-core (deci-core?) Xeon to build a 160-core cluster on four nodes. We’ll post closer looks at both teams soon.

Returning champion Team Taiwan has worked with sponsors Acer and NVIDIA to design a hybrid system with 72 Intel Xeon cores plus six Tesla GPU accelerators. GPUs are a new wrinkle for the Taiwanese; their award-winning 2010 cluster utilized traditional x86 processors. As with the other GPU-wielding teams, the key to winning will be how much extra bang for the watt they can pull out of the specialty chips.

The Boston University Terriers bring more compute cores – 336 – than any other team. Their cluster relies on 16-core AMD Interlagos processors for x86 compute power, but they’ve also added four NVIDIA Tesla cards to the mix. They certainly can’t run all of this gear and stay under the 26-amp limit, so the question will be how much (and how well) they can gear the cluster down to run fast without gulping too much power.

Team Russia, in their second SCC appearance, has brought a cluster that’s composed of seven nodes with 84 Xeon x86 cores and a grand total of 12 NVIDIA Tesla cards. This makes them the most “GPU-riffic” team in the competition by a factor of two. Team sponsor Microsoft has helped the team with the hardware and software optimizations. If the Russian bear can take advantage of all that GPU goodness, then they have a good shot at taking at least the LINPACK award. Time will tell.

The Costa Rica Rainforest Eagles lost their sponsor at the last minute. Disaster was averted as the HPC Advisory Council stepped in to sponsor the team, supplying them with all the hardware they need to compete. And it’s top-shelf gear too, with four quad-socket Interlagos nodes sporting 256 cores, which puts them in the mix with the other teams. Gilad Shainer, HPC Council chair, even found the team a couple of GPU nodes, but they probably won’t utilize them given that GPUs weren’t part of their original plan.

The SCC hardware configurations this year can be summed up with one word: Diverse. Actually, two words: Wildly Diverse. Fully half the teams are using some sort of GPU acceleration, up from only two last year. We even have Texas with their attention-grabbing mineral oil deep-fried nodes. (Or compute hot tub?) We’ll see how it all works out as the teams begin their first competition workload: LINPACK.
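For anyone who wants to check the tallies, here is a minimal Python sketch of the configurations described above. The dictionary layout and field names are my own illustration, not anything the teams or organizers published. Two entries are assumptions: Texas is listed with zero GPUs because no accelerators are mentioned for that cluster, and Costa Rica is listed with zero because the team probably won’t use its loaned GPU nodes.

```python
# Core and GPU counts transcribed from the team descriptions above.
# Assumptions: Texas has 0 GPUs (none are mentioned for that cluster);
# Costa Rica has 0 because its loaned GPU nodes will probably go unused.
teams = {
    "Texas":      {"cores": 132, "gpus": 0},
    "NUDT":       {"cores": 24,  "gpus": 6},
    "Colorado":   {"cores": 256, "gpus": 0},
    "Purdue":     {"cores": 160, "gpus": 0},
    "Taiwan":     {"cores": 72,  "gpus": 6},
    "Boston":     {"cores": 336, "gpus": 4},
    "Russia":     {"cores": 84,  "gpus": 12},
    "Costa Rica": {"cores": 256, "gpus": 0},
}

# Teams running at least one GPU accelerator, in alphabetical order.
gpu_teams = sorted(name for name, cfg in teams.items() if cfg["gpus"] > 0)

# Team with the highest x86 core count.
most_cores = max(teams, key=lambda name: teams[name]["cores"])

# Ratio between the largest GPU count and the next largest.
gpu_counts = sorted((cfg["gpus"] for cfg in teams.values()), reverse=True)
gpu_lead = gpu_counts[0] / gpu_counts[1]

print(f"{len(gpu_teams)} of {len(teams)} teams use GPUs: {', '.join(gpu_teams)}")
print(f"Most cores: {most_cores} ({teams[most_cores]['cores']})")
print(f"Top GPU count leads the runner-up by a factor of {gpu_lead:g}")
```

Run as written, it agrees with the figures quoted in this post: four of the eight teams are using GPUs, Boston has the most cores, and Russia has twice as many GPUs as the next team.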

We’ll have full event coverage plus updated odds charts (the betting window is almost closed), video interviews, and overviews of each team. Check back often.